As the European Union takes the lead in establishing the world's first comprehensive legal framework for artificial intelligence, there's a looming risk that haste may lead to waste. After the AI Act's lengthy and challenging adoption process, the focus now shifts to crafting its Codes of Practice. These guidelines are expected in spring next year.

These codes are essential. They will unpack the legal requirements of the AI Act into practical guidelines, serving as a crucial tool during the transition phase. They will help bridge the gap between when obligations kick in for general-purpose AI (GPAI) model providers and when so-called harmonised European GPAI model standards are fully adopted. More broadly, these codes aim to shield people from AI's potential dangers.

The stakes are high. Crafting these rules will not only define how AI is used now but also shape its future development and potential. The Commission's AI Office must prioritise collaboration with the technology industry in drafting the rules for general-purpose AI. Companies with a dog in the fight are keeping a close eye on this process, but the ultimate responsibility for producing an effective regulatory framework lies with the EU.

Codes of Practice are highly appealing to major AI companies. In the short term, adherence to these codes will be seen as legal compliance, while non-compliance could lead to hefty fines. Brussels must strike the right balance between keeping industry voices in the room and handing them too much power. Companies like OpenAI and Google have a lot to say, and EU politicians should listen, but that doesn't mean those companies should be allowed to steer the direction of policy without accountability.

The EU AI Office plans to oversee the process rather than lead it, leaving the drafting to consulting firms. This could mean the Big Four consulting firms end up writing the rules alongside their own partners, which include OpenAI and Microsoft.

Although external partners will draft the codes, all stakeholder consultations, agendas, and methodologies require approval from the Commission's new AI Office. Here's the catch: while the AI Office will oversee the process, it won't be deeply involved beyond approving the final codes. This leaves room for subtle advocacy and lobbying to shape the codes incrementally, without ever prompting a complete overhaul.

Of course, this isn't the first time the Commission has operated like this. The consulting firm drafting the codes is hired under a framework contract: firms compete in a public tender and are pre-selected for general tasks, such as helping Commission services with audits. The Commission has also relied on industry to draft codes before. In 2018, for example, it collaborated with major digital platform providers to develop a code of practice for handling disinformation. However, concerns about industry bias and inadequate reporting persist, raising questions about the signatories' commitment to compliance.

It has been announced that the AI Office will bring together AI providers, authorities, and other stakeholders to help craft these guidelines. However, if the drafting process for the General-Purpose AI Codes of Practice isn't inclusive, incorporating civil society, EU-based companies, academics, scientists, and independent experts, it risks becoming a purely industry-driven endeavour.

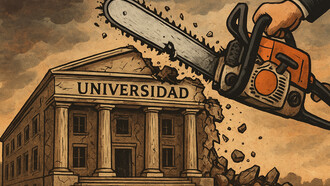

In effect, this means tech giants could end up writing their own rules, which would ultimately harm them, their European rivals, and their users. The EU AI Office needs to prevent this, but there's another issue: concerns about the office itself. In June, re-elected MEPs from three different parties questioned the procedure for selecting the head of the AI Office. Some MEPs had initially pushed for the AI Office to operate with a degree of independence, but it was eventually integrated into the Commission.

Meanwhile, 34 leading civil society organisations have already raised concerns about political and corporate influence over the EU's AI Act. They are calling on the Commission to ensure that any authority appointed to enforce the AI Act remains independent.

Rapid technological advances, mostly driven by the private sector, often outpace regulators and leave them dependent on information from developers. This dynamic makes co-regulation and self-regulation essential complements to traditional regulation.

The absence of diverse expertise from civil society in shaping standards exposes critical gaps in AI regulation and raises concerns about safeguarding fundamental rights. Greater transparency and inclusivity are essential in the methodologies used to identify risks and produce the codes, which should draw on a broad spectrum of skills and perspectives.

Involving diverse groups under the AI Act will require urgent reform of standard-setting processes. To make AI regulation fair, responsive, and democratically sound, inclusivity is key. Outsourcing this process risks poor results. While industry involvement is essential, the EU must draw a clear line to manage the potential risks and ensure a balanced approach that benefits all.

This article was written by Irakli Machaidze. Irakli is a Georgian political writer, analytical journalist, and fellow with Young Voices Europe. Irakli is currently based in Vienna, Austria, pursuing advanced studies in international relations. He specialises in EU policy and regional security in Europe.